Using a local LLM as an AI coding assistant

If you want to use AI for coding, there are plenty of tools to choose from. Popular options include GitHub Copilot, Claude Code, and Gemini. But what if you’d rather not send your code to external services? Now that computers are becoming more powerful, is it possible to run a large language model locally and use it as your own coding assistant?

Two weeks ago I joined an internal Bol AI hackathon, where we had the chance to experiment with different AI ideas. I had already been wondering whether running a local LLM as a coding assistant is viable, so the timing couldn’t have been better to try it out. Running a model locally was new to me, so I started by asking GPT-5 to explain the basics. Once I understood what I needed, it turned out that the easiest way to get started is a lightweight runner like Ollama, which supports Linux, macOS, and Windows. If you’re on a Mac, you can install it with Homebrew:

```shell
brew install ollama
```

Installing Ollama itself doesn’t take long, but downloading models can take a while because they can be quite large. Before you grab a model, it helps to understand how they’re categorized. You’ll often see labels like '13B Q8'. Two things matter here: the size and the quantization level. The size, marked with a B, is the number of parameters in billions. The quantization level, marked with a Q, tells you how many bits each parameter uses after compression. You don’t have to dive deep into the theory, because the idea is simple: lower numbers run faster and fit on smaller hardware at the cost of some accuracy, while higher numbers need more resources and respond more slowly but produce better output.
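Size and quantization together give a rough idea of how much memory a model needs. As a back-of-the-envelope sketch (a simplification that treats the Q number as the exact bits per weight and ignores the context window and runtime overhead; real quantization formats add a little extra), the weights take about parameters × bits ÷ 8 bytes:

```python
def estimate_weight_memory_gb(params_billion: float, bits_per_param: float) -> float:
    """Rough memory for the model weights alone, in gigabytes (10**9 bytes).

    Each parameter takes bits_per_param / 8 bytes, so one billion parameters
    at 8 bits is about 1 GB. The context window (KV cache) and the runtime
    itself need memory on top of this.
    """
    bytes_per_param = bits_per_param / 8
    return params_billion * bytes_per_param


# Labels in the same "size + quantization" style as model listings.
for label, params, bits in [("7B Q4", 7, 4), ("13B Q8", 13, 8), ("32B Q4", 32, 4)]:
    print(f"{label}: ~{estimate_weight_memory_gb(params, bits):.1f} GB")
```

This prints about 3.5 GB, 13.0 GB and 16.0 GB respectively. Fitting in memory is only the first hurdle, though: a model that fits can still be slow, especially when it has to process a large prompt.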

After reading more about running Ollama on my MacBook M4 Pro with 48 GB of memory[1], I decided to try the qwen3:32b model, so I pulled it:

```shell
ollama pull qwen3:32b
```

Then I ran a simple test:

```shell
ollama run qwen3:32b "Can you explain what 2 + 2 does?"
```

Within seconds, it gave me a solid answer[2]. Cool, so running a model locally isn’t that hard! If you want a proper coding-assistant experience, though, you need to feed the model plenty of context. Doing that by hand gets tedious fast, so you’ll want either a terminal tool or an IDE plugin that bundles up the context for you. Since I usually prefer CLI tools over half-baked IDE plugins, I gave OpenCode a try:

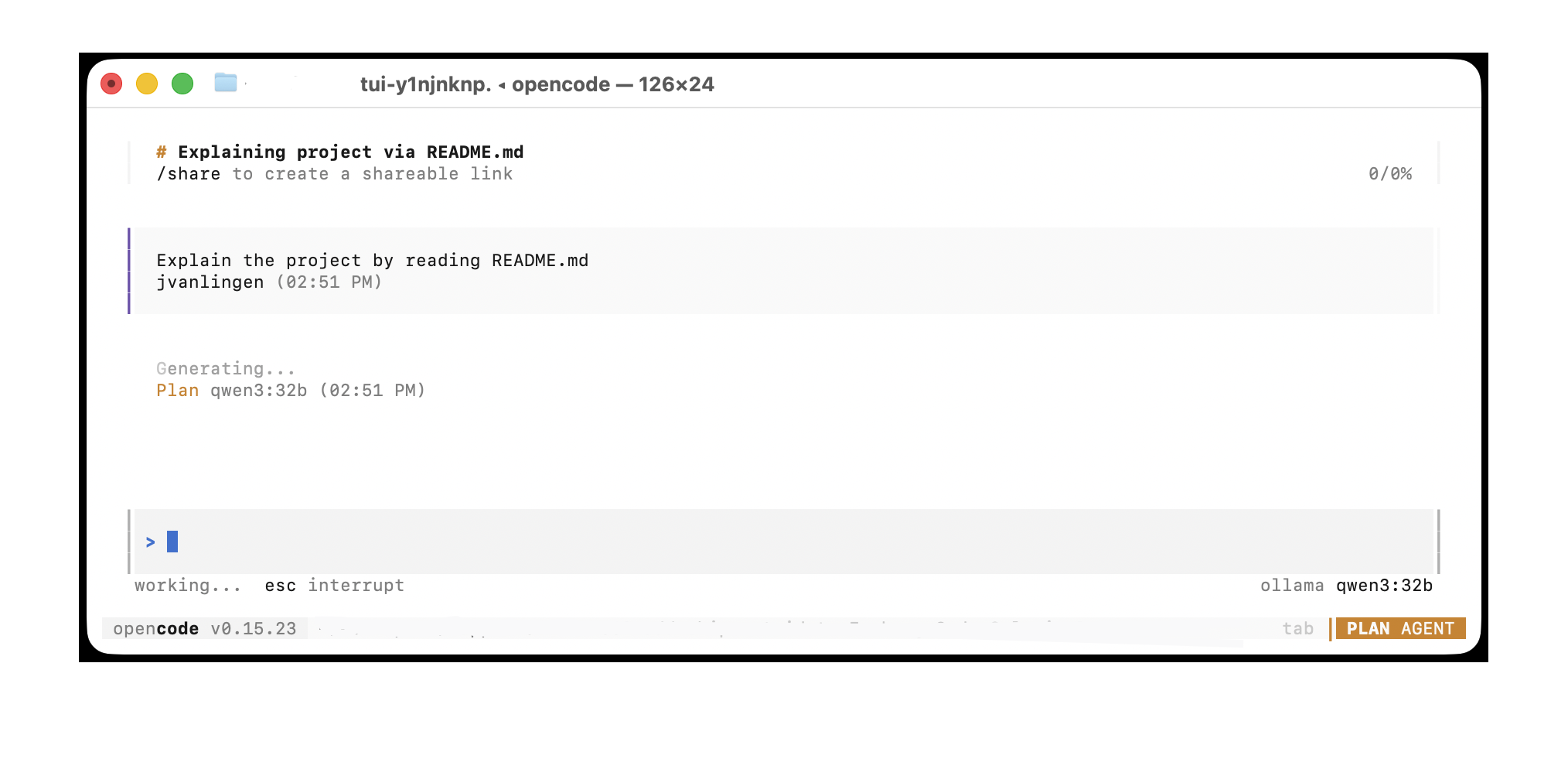

```shell
brew install opencode
```

Then I moved on to a real project I’m working on, which includes a significant amount of frontend and backend code, and prompted it with a question.

It took over 3 minutes to get an answer! It became clear that running this model isn’t practical unless you have a truly powerful machine, so I switched to the smaller mistral:7B model. It answered in around 15 seconds: still a bit slow, but workable for the experiment I was doing.
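If you want to measure "slow" rather than use a stopwatch, Ollama’s HTTP API (listening on http://localhost:11434 by default) returns timing metadata with each non-streamed response from `/api/generate`: `eval_count` and `eval_duration` for the generated answer, `prompt_eval_count` and `prompt_eval_duration` for the prompt, and `total_duration` for the whole request, with all durations in nanoseconds. A minimal sketch, assuming a running Ollama server and an already pulled model; the helper names are hypothetical:

```python
import json
import urllib.request

# Ollama's default local endpoint for one-shot text generation.
OLLAMA_GENERATE_URL = "http://localhost:11434/api/generate"


def generate(model: str, prompt: str) -> dict:
    """Send one non-streaming request to a local Ollama server and return its JSON reply."""
    payload = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    request = urllib.request.Request(
        OLLAMA_GENERATE_URL, data=payload, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)


def tokens_per_second(result: dict) -> float:
    """Speed of writing the answer: generated tokens / generation time (ns -> s)."""
    return result["eval_count"] / (result["eval_duration"] / 1e9)


def prompt_tokens_per_second(result: dict) -> float:
    """Speed of reading the prompt; a large bundled context adds time here.

    Uses .get() because these fields may be missing from some responses.
    """
    count = result.get("prompt_eval_count", 0)
    duration_ns = result.get("prompt_eval_duration", 0)
    return count / (duration_ns / 1e9) if duration_ns else 0.0


def total_seconds(result: dict) -> float:
    """Total time Ollama reports for the request, converted from nanoseconds."""
    return result["total_duration"] / 1e9
```

For example, `result = generate("mistral:7b", "Explain currying to me")` followed by `tokens_per_second(result)` gives the generation speed. Looking at the prompt and generation rates separately helps tell whether a slow answer comes from reading a large context or from writing the reply.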

I picked a very simple Jira task from the backlog, wrote a prompt similar to what I would use with a remote LLM, and started working. The experience, however, was worse than I expected. The LLM didn’t seem to fully grasp the context of my code. I wasn’t sure whether the issue was the model itself or OpenCode. To check if the model had any programming knowledge, I asked it two questions: “Explain currying to me” and “Explain the concept of dependency injection and how the Spring Framework implements it.” Both answers were correct and returned in under five seconds.

Tools like Gemini CLI or the Cursor IDE handle a lot of invisible work behind the scenes. A key part of that is generating a solid pre-prompt that gives the LLM the right project context. So maybe the issue was OpenCode after all, and its pre-prompting just wasn’t as good as that of other tools. To test that idea, I kept digging. A Reddit post eventually pointed me to ProxyAI, a JetBrains plugin that describes itself as the 'leading JetBrains-first, open-source AI copilot'. It has native Ollama support, which makes the setup straightforward.

And indeed, the results were a little better! That suggested the tooling around the model matters as much as the model itself. Still, the workflow didn’t come close to what remote LLMs offer, so I decided to give it one more try. Earlier this year, DeepSeek caught the world off guard, so I figured it was worth testing one of their models. I picked deepseek-coder:6.7b, a relatively small model that I hoped would at least improve response times. Interestingly, it outperformed the slightly larger mistral:7B model I had been using. Even so, it still wasn’t good enough.

One thing remained to be figured out: is it really the model that makes the difference? To answer this, I connected ProxyAI to Google’s gemini-2.5-pro model. Suddenly, the results matched what I would have gotten with the Gemini CLI. This shows that the model does the heavy lifting, while the surrounding tools mainly provide the necessary context.
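To make "providing the necessary context" concrete, here is a minimal sketch of the general idea behind such tools, not how OpenCode or ProxyAI actually work: read a few project files, wrap them in a system message together with instructions, and send everything to Ollama’s `/api/chat` endpoint. The system prompt text, the file selection and the helper names are all hypothetical:

```python
import json
import urllib.request
from pathlib import Path

# Ollama's default local chat endpoint.
OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"

# Hypothetical instructions; real tools use much longer, carefully tuned pre-prompts.
SYSTEM_PROMPT = (
    "You are a coding assistant. Answer using the project files below. "
    "Refer to files by path and follow the existing code style."
)


def read_files(paths: list[str]) -> dict[str, str]:
    """Read the given source files; a real tool would choose these automatically."""
    return {p: Path(p).read_text(encoding="utf-8") for p in paths}


def build_messages(question: str, files: dict[str, str]) -> list[dict[str, str]]:
    """Bundle the instructions, the project files and the user's question into chat messages."""
    context = "\n\n".join(f"### File: {path}\n{content}" for path, content in files.items())
    return [
        {"role": "system", "content": f"{SYSTEM_PROMPT}\n\n{context}"},
        {"role": "user", "content": question},
    ]


def ask(model: str, messages: list[dict[str, str]]) -> str:
    """Send one non-streaming chat request to a local Ollama server and return the reply text."""
    payload = json.dumps({"model": model, "messages": messages, "stream": False}).encode("utf-8")
    request = urllib.request.Request(
        OLLAMA_CHAT_URL, data=payload, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)["message"]["content"]
```

In this sketch the context assembly in `build_messages` is independent of the `model` argument passed to `ask`: the same bundle of context can go to a small local model or a larger one, and the quality of the answer then comes down to the model.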

Where does this leave us? Running an LLM locally is definitely possible, but using it as a coding assistant isn’t quite practical yet. The larger models that give useful responses require a very powerful machine with plenty of memory. I do believe this will become a viable solution in the future. For now, though, it’s best to rely on remote LLMs. Or perhaps, even better, stick with your own brain!