The product of software development

Over the last few years, the software industry has been gripped by an all-overshadowing discussion about AI and LLMs. What started as a fun experiment, with ChatGPT showing that this “intelligence” could sometimes write somewhat coherent, compiling code, has quickly grown into a plethora of LLMs, IDE plugins and AI-based tools that influence (or take over) every aspect of software development.

While these discussions do address the value and shortcomings of the tools, I find myself more preoccupied with the effect they will have on our profession.

The capability of these tools has positively surprised me. I’ve been experimenting with different AI tools, and the experience of an immediate productivity gain is undeniable. Helping me understand (and often fix) errors, writing boilerplate code, and sometimes even correctly implementing complex features or generating entire test suites: it all feels like it saves a tremendous amount of time.*

*Research (link) shows that, depending on the circumstances, using AI tooling can lower productivity.

Changing the narrative

Despite these benefits, I still feel uneasy when listening to talks or reading blogs, articles and LinkedIn posts about the impact of AI on our profession. The narrative always revolves around the benefits and/or risks of using AI tools, from the perspective that they are already part of our toolset.

Most opinions regarding the use of AI tools follow one of three narratives:

- Embrace, or go extinct
- Use, but verify
- Reject, stay smart

I think there is some truth in all of them, but they all assume AI is already here and only ask whether we like it or not. Let’s step back and examine the fundamental changes AI tools have introduced.

Where are we now?

The question is not whether we, as software developers, should learn to use AI effectively in our daily work, but rather how integrated it will become. As it stands, AI can be tremendously helpful, but there are also numerous examples of AI introducing security or performance issues. This means that even with the best prompts and models, we can’t let an AI write software unsupervised. Whether AI makes us dumber is a more contested question, but I do notice that I lazily rely on AI to write code I would happily have written myself not long ago.

In all three perspectives lies a fundamental misunderstanding about what it is that software developers produce.

Developing Software is much more than writing the code.

While developing software, we are constantly engaging with the environment our software runs in.

- We know the social context where our application solves a problem
- We understand the domain our application lives in
- We know the history and can predict the future of our applications
- We have an understanding of the frameworks and libraries we are using
- We write tests to verify certain behaviors
- We have an understanding of how the applications we interact with behave
- We know about the infrastructure our application will run on
- We understand how critical (or non-critical) our application is
- We know what value our software or the feature will bring when we finish it

Our role as software developers is to wrestle with all of these things and understand which direction a line of code, a feature or sometimes even the whole application architecture should take.

The product of developing software is the understanding we gain, which guides our current and future endeavors in the codebase. In that final commit, we try our very best to formalize our insights, learnings and struggles into an elegantly written piece of well-tested software, accompanied where necessary by comments, Architecture Decision Records (ADRs) and commit messages that preserve our reasoning for future eyes.

What has AI changed?

An AI might be able to produce the same result, but it cannot give us the understanding we never gained.

AI tooling allows us to skip straight to a working formal notation, the code, to check in. Most of the time this will probably be a sufficient solution to the problem we posed, but we shouldn’t forget the part we are no longer producing: we’re partly or fully skipping the process of understanding why that formal notation is the correct solution.

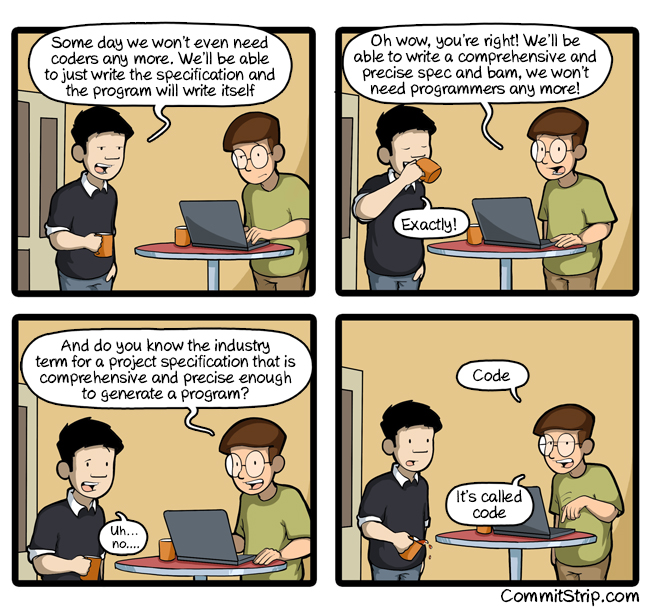

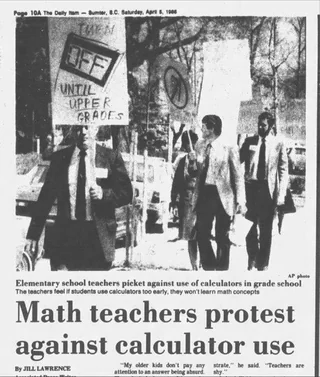

An analogy might help clarify the difference further. When seeking the answer to a mathematical question, pen and paper were once sufficient. The introduction of the calculator (comparable to our IDEs) fundamentally changed the way of working, but it didn’t change the need to understand the question and formulate a solution before calculating the actual answer.

AI just gives us the answer without letting us truly formulate the solution. When we only want the total of the prices on a receipt, both approaches might be perfectly valid, but math wouldn’t be math if the formulated solution (e.g. the Pythagorean theorem) weren’t often more important than the actual answer (e.g. the length is 5).
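
The difference between the formulated solution and the answer can be made concrete. Assuming, purely for illustration, a right triangle with legs of length 3 and 4, the two look like this:

$$
c = \sqrt{a^2 + b^2} \quad\Rightarrow\quad c = \sqrt{3^2 + 4^2} = \sqrt{25} = 5
$$

The answer 5 settles this one triangle; the formula settles every right triangle, and that is the part of the work that carries over to the next problem.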

Interestingly, both the calculator and code completion received pushback when they were introduced, because passionate people feared a decline of knowledge and understanding in their craft. Both tools were eventually embraced, but the initial resistance sparked important discussions about their impact and how to preserve essential skills.

"Is AI already good enough?" is not the right question

I’m afraid this failure to produce true understanding is what will prove damaging to our profession in the long run. Besides suboptimal code that looks good enough to pass a review, there have already been incidents where AI-generated code caused significant issues.

These developments have sparked plenty of commentary. Most articles and blog posts, even those in favor of embracing AI, include a call to supervise the AI and understand what it produces. On the more playful side of criticism, developers are changing their title to things like "Vibe Code Cleanup Expert".

Always reviewing what an AI produces is no substitute for truly developing a piece of software.

Our understanding of the software we produce is what makes us valuable as software developers. Leaving this understanding solely in the context or "brain" of an AI erodes that value and introduces a big risk for the future, because we won’t be able to sufficiently understand our own systems.

The question often asked is whether the quality of AI-produced code is good enough to reach production. I think (with supervision) it often already is, although I’ve seen plenty of generated code where the AI completely missed the point.

Food for thought

My own fundamental questions lie not in the source code, but in the effect AI tooling has on our craft.

- At what point (if ever) will the models (and supporting ways of working) become good enough to justify relinquishing our own understanding of the software we build and maintain?
- Until we reach that point, AI might already be interfering with our understanding of the software we build, especially since the share of developers who learned to code without AI has already started to shrink. How do we prevent the decline of true understanding within our craft?